Impact of Indexes on MySQL Write Performance

This article benchmarks MySQL to show how different index types impact write performance on various columns, highlighting the best and worst column types for indexing efficiency.

Introduction

In our fast pace environment of crypto exchange, write speed to database plays important role. For example, every trade, transaction or even non executed order needs to be written somewhere and for some of these, we use AWS Aurora. If you worked on the field for some time, you know, there is never ending issue with developers requesting an index to this or that column in some table. Adding an index in MySQL does not go without penalty to write performance. Reason is, that because for every database insert, an index needs to be updated too. This means there is more work to do and therefore this impacts write performance.

This is nothing new, but what you might be wondering is, how much does it actually impact performance and which types of columns in MySQL are worse than the other and for how much?

That is the real question.

Battle plan

In order to answer some of these questions, I prepared a small benchmark on db.c5.large Aurora cluster. Nothing special about it, everything default, so in short, ACID compliant database.

Main thing that we want to do is:

Create a table with multiple different types of columns

Pre-fill few million rows of random data

Do a SysBench insert benchmark, to see how everything performs without indexes

Add an index on a column

Repeat benchmark, to see how much that impacted the write performance

Rinse-repeat step 4 and 5 until all of the columns have index

Of course having index on every column makes absolutely no sense from practical standpoint, but we are not trying to benchmark reads. Writes are what we are interested in and how types of indexes impact them.

Actual setup

Table

Note, few other types exist in MySQL, but from perspective of adding an index, it makes no sense to put index on for example “TEXT” type or something similar…

CREATE TABLE benchmark_table (

id INT AUTO_INCREMENT PRIMARY KEY, -- Integer

name VARCHAR(255), -- Variable-length string

birthdate DATE, -- Date

salary DECIMAL(10, 2), -- Decimal for financial values

is_active BOOLEAN, -- Boolean (True/False)

created_at TIMESTAMP DEFAULT CURRENT_TIMESTAMP, -- Timestamp with auto-update

rating FLOAT, -- Floating-point number

visit_count BIGINT -- Large integer for counters

);SysBench parameters

sysbench \

--db-driver=mysql \

--mysql-host=xxx \

--mysql-user=xxx \

--mysql-password=xxx \

--mysql-db=benchmark \

--report-interval=3 \

--time=120 \

--threads=150 \

./insert.lua runInsert Lua script

function get_conn()

drv = sysbench.sql.driver()

return drv:connect()

end

function thread_init(thread_id)

con = get_conn()

end

function random_string(length)

local chars = "abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ"

local str = ""

for i = 1, length do

local rand_index = math.random(#chars)

str = str .. chars:sub(rand_index, rand_index)

end

return str

end

function random_date(start_year, end_year)

local year = math.random(start_year, end_year)

local month = math.random(1, 12)

local day = math.random(1, 28) -- To avoid invalid dates

return string.format("%04d-%02d-%02d", year, month, day)

end

function event(thread_id)

local name = random_string(8) -- Generate a random 8-character name

local birthdate = random_date(1970, 2000) -- Generate a random birthdate between 1970 and 2000

local salary = math.random(30000, 100000) + math.random() -- Generate a random salary with decimals

local is_active = (math.random() > 0.5) and "TRUE" or "FALSE"

local rating = string.format("%.1f", math.random(10, 50) / 10) -- Random rating between 1.0 and 5.0

local visit_count = math.random(1, 500) -- Random visit count

local query = string.format("INSERT INTO benchmark_table (name, birthdate, salary, is_active, created_at, rating, visit_count) VALUES ('%s', '%s', %.2f, %s, NOW(), %s, %d)",

name, birthdate, salary, is_active, rating, visit_count)

con:query(query)

end

function thread_done(thread_id)

con:disconnect()

endIndexes were added in this order:

1. CREATE INDEX idx_name ON benchmark_table (name);

2. CREATE INDEX idx_birthdate ON benchmark_table (birthdate);

3. CREATE INDEX idx_salary ON benchmark_table (salary);

4. CREATE INDEX idx_is_active ON benchmark_table (is_active);

5. CREATE INDEX idx_created_at ON benchmark_table (created_at);

6. CREATE INDEX idx_rating ON benchmark_table (rating);

7. CREATE INDEX idx_visit_count ON benchmark_table (visit_count);Results

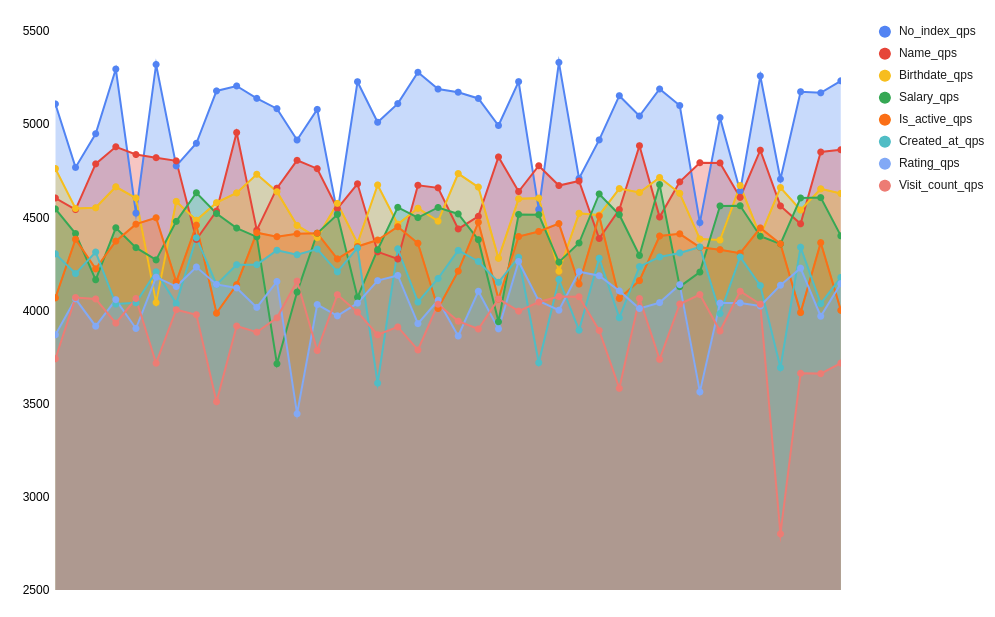

Keep in mind indexes are compounded. So, Visit_count_qps for example consists of all other columns having index, plus a visit_count.

Queries per second - higher is better

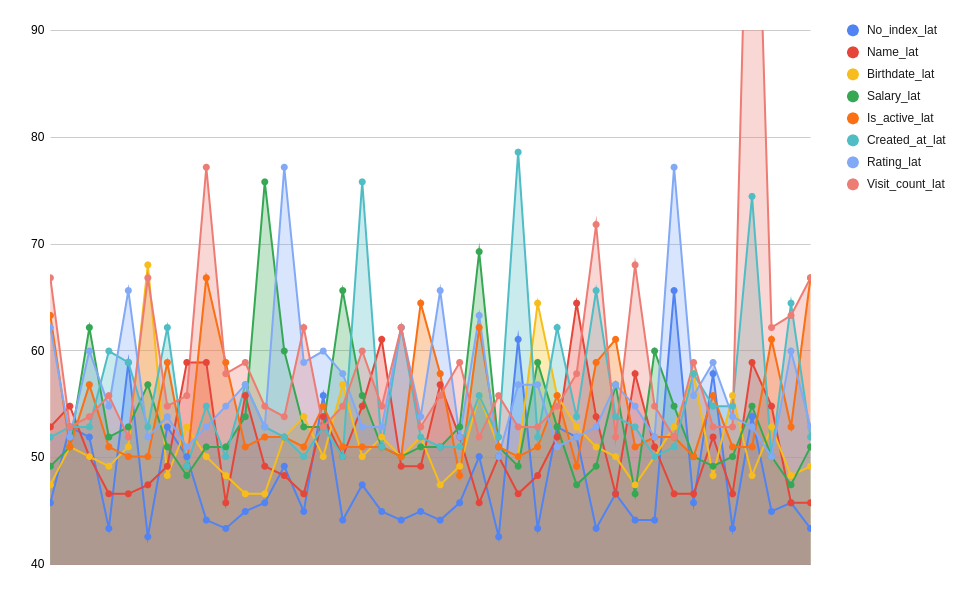

Latency (ms) - less is better:

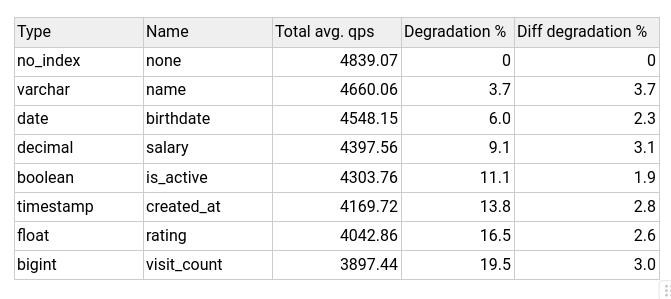

Since above graphs might be a bit noisy and hard to read, I also prepared drill-down of degradation of performance per column type added:

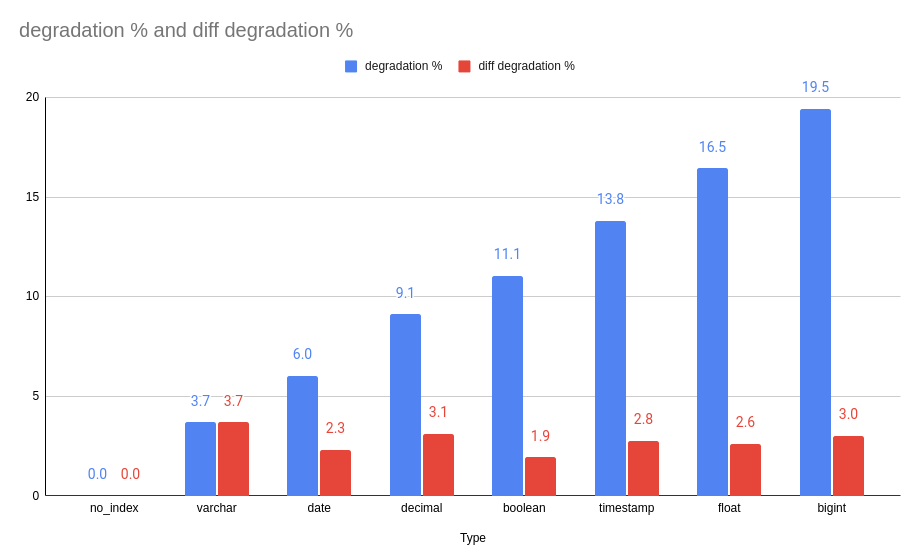

And visual graph of above table:

Summary

Here it is, magic answer to your questions on how much degradation in writes can you expect per index type. As we can see some types are a little worse than the others, but in general, they all add certain penalty. Boolean being one of the best indexes and varchar being one of the worst column types you could choose to add index on. Keep in mind that with MySQL, adding an index also increases table/disk size, and by the rule of thumb, if you have more than 30% of additional disk claimed by indexes, you are probably abusing indexes too much and should rethink your strategy.